Beyond the Platform:

Guilds Are Back. The AI Revolution No One Saw Coming

Executive Summary: Rent Cognition or Own It?

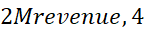

For decades, low-margin industries (restaurants, farms, trucking) rationally avoided digitization—platform fees and IT failures threatened survival on 3-5% margins. Now, AI collapses build costs and coordination friction, enabling a third path beyond platforms or franchises: AI-powered guilds. These cooperatives pool resources to deploy sovereign edge-compute stacks, transforming operational data into a defensible “context moat.” As hyperscalers and foundation models squeeze platforms into irrelevance, and energy constraints favor distributed architectures, guilds offer 2–3× margin expansion through aligned incentives, payments leverage, and waste reduction. The future belongs not to digital landlords, but to operators who own their cognition.

Key Takeaways

The Squeeze Is On: Platforms (Toast, Square) face disintermediation as hyperscalers (AWS) and model builders (OpenAI) vertically integrate, eroding their value.

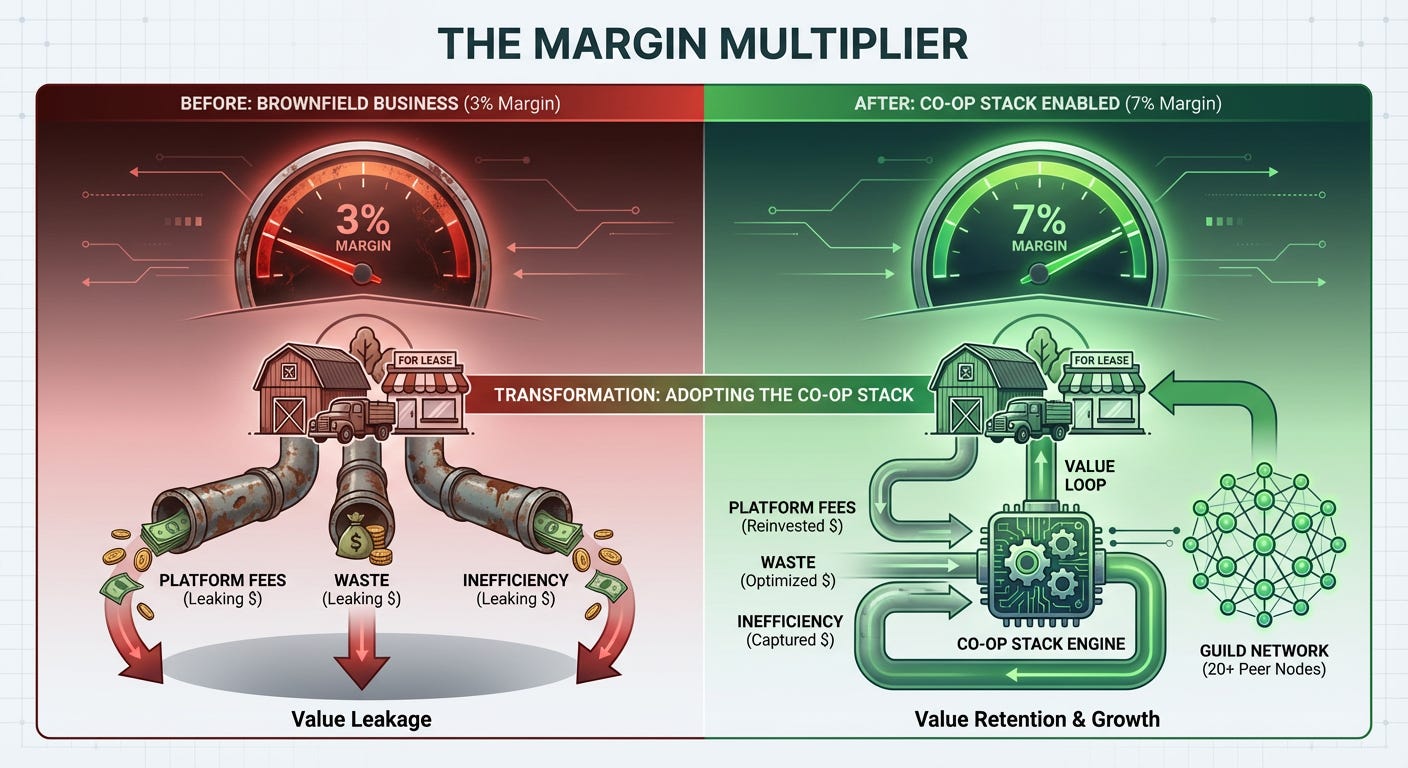

The Guild Advantage: 40–50 operators pooling $6K/location can deploy:

Heterogeneous edge compute (20× cheaper than cloud GPUs)

Federated learning (context depth > generic scale)

Data trusts (antitrust-safe collaboration)

Incentives Align, Margins Expand:

Payments savings (40–80 bps)

Waste reduction (5–7% of COGS)

Labor optimization (1–2% efficiency)

Combined effect: 4% margins → 7–9% (Gulf Coast case: $67K–130K/location over 3 years).

Energy Is the New Bottleneck: Grid constraints favor edge deployments with behind-the-meter renewables, not GPU-locked data centers.

Value Migration Phases:

Now: Compute/energy owners win (NVIDIA).

3–7 yrs: Coordination protocols dominate (federated learning standards).

8–12 yrs: Guilds with deepest operational integration thrive.

The Ultimate Choice: Rent cognition from platforms or own intelligence through guilds. Sovereignty wins.

Devansh’s recent piece on AI transforming low-tech industries nails why brownfield digitization is finally economically viable. His insight that these sectors weren’t “behind” or “luddites,” but rather rationally avoiding IT-driven suicide, is exactly right. When you’re operating on 3-5% margins and enterprise software has historically carried severe cost overruns, the clipboard isn’t ignorance, it’s survival: the Standish Group’s 1990s CHAOS reports showed ~189% average overruns, and the more recent McKinsey/Oxford 2012 studies still found ~45% average overruns with heavy-tailed catastrophic failures.

His Digital Viability Index (DVI) elegantly captures what most tech analysts miss: the sectors most desperate for transformation (agriculture with single-digit net margins and extreme volatility, food service, construction, trucking) are precisely where previous digitization attempts died hardest. And his identification of the new enabling stack (AI collapsing build costs, edge computing providing deterministic reliability, cheap inference models, adaptive agentic systems) explains why now is genuinely different.

But I want to build on his analysis in a direction that reveals something more interesting: what if the binary he describes—Toast versus Square, franchise versus independent, platform versus operator—isn’t the real choice at all?

What if we’re witnessing a fundamental restructuring of where value lives in the AI era? And what if that enables organizational forms we thought capitalism had permanently displaced?

Let me explain what I mean.

The Squeeze: Distribution Is No Longer the Bottleneck

In the web/mobile era (2000-2020), value accrued to the platform and aggregation layer. Infrastructure became commoditized. Applications competed into razor margins. But platforms—Facebook, Uber, Airbnb—captured enormous value sitting between users and providers because marginal costs approached zero, distribution was the bottleneck, and network effects compounded.

But look at where margins actually are in the AI economy:

NVIDIA: 63-75% gross margins (fiscal 2024-2025, varying by product mix and GAAP vs. non-GAAP accounting)

OpenAI: Spending billions annually on compute and model R&D; losses subsidized by hyperscalers and investors (public financials undisclosed; press estimates vary from $7B to $13B annually)

Application platforms: Contested and shrinking (competing on features)

The bottleneck shifted from informational scarcity (who has users and data) to physical scarcity (who has compute and energy). This isn’t minor—it’s a fundamental restructuring of where power lives.

Meanwhile, the application layer is getting crushed from both directions:

From below: Hyperscalers (AWS, Azure, Google) are vertically integrating from compute through applications—Bedrock, custom chips, integrated AI services.

From above: Foundation builders (OpenAI, Anthropic) are moving down—GPTs, direct-to-consumer experiences, operational workflows.

The platforms Devansh describes—Toast, Square, Salesforce—are being compressed into a zero-margin compatibility layer, reselling hyperscaler compute and foundation model capabilities with integration glue. When the “platform advantage” you’re supposed to fear is itself just a middleman about to be disintermediated, the strategic landscape changes completely.

The Cooperative Path Nobody’s Talking About

While everyone analyzes which platform will dominate, something entirely different is emerging in the harshest possible environment for distributed models.

Captive insurance—where companies pool together and self-insure—is the fastest-growing segment of the insurance industry. This shouldn’t work: massive capital requirements, extreme regulatory complexity, actuarial sophistication barriers, century-old incumbent moats. Yet it’s thriving because traditional insurance became unaffordable, and pooling risk was the only economically viable path.

This isn’t isolated. Agricultural cooperatives (Ocean Spray, Land O’Lakes, Sunkist collectively representing billions in revenue), credit unions and their service organizations (CO-OP Financial Services), medical group purchasing organizations like Vizient and Premier ($300B+ market)—the pattern repeats: when margin pressure is extreme and operational context is similar, distributed cooperation outcompetes traditional hierarchies.

A single restaurant may pay Toast roughly $1,980/year for the SaaS layer alone, but that’s not where the real cost lives. The larger extraction happens in payments economics: platforms typically capture 40-100 basis points above actual interchange, assessment, and processor costs. On the $1.5M annual card volume of a typical SMB restaurant, that is $6,000-$15,000/year flowing to the platform.
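The payments arithmetic is worth making explicit, since basis points hide how large the number is. A minimal sketch, using the 40-100 bps markup and $1.5M card volume quoted above (the function name is just illustrative):

```python
def platform_markup_dollars(annual_card_volume: float, markup_bps: float) -> float:
    """Dollars per year captured by a markup quoted in basis points (1 bp = 0.01%)."""
    return annual_card_volume * markup_bps / 10_000


volume = 1_500_000  # annual card volume quoted in the text
low = platform_markup_dollars(volume, 40)    # 40 bps above true processing cost
high = platform_markup_dollars(volume, 100)  # 100 bps above true processing cost
print(f"${low:,.0f} to ${high:,.0f} per year")  # $6,000 to $15,000 per year
```

Either end of that range is several times the SaaS subscription itself.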

Fifty restaurants could pool resources differently. But how do you actually make this work?

The Governance Problem (And How AI Solves It)

Traditional cooperatives failed not because coordination was expensive, but because they couldn’t resolve conflicts, prevent free-riding, or make timely decisions. This is where skeptics will attack hardest.

AI changes this through three practical mechanisms, informed by Ostrom’s principles for governing commons:

1. Automated Contribution Tracking

Contribution becomes computational and measurable:

Every transaction generates trackable data (with member consent)

Model improvements from your operational feedback are quantifiable via secure aggregation and contribution scoring (analogous to Shapley value proxies)

Computational resources are precisely metered

Quality signals (error rates, waste reduction, customer satisfaction) are automatically captured

Your ownership stake and voting weight adjust based on actual contribution, measured in real-time. Restaurant A processes 10,000 transactions monthly with rich feedback. Restaurant B processes 2,000 and rarely corrects. Restaurant A’s voting weight adjusts proportionally. No committee. No politics. Just auditable math.
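To make "auditable math" concrete, here is a rough sketch of contribution-weighted voting. The scoring rule (transaction volume scaled by how often a member gives feedback) and the 0.8 and 0.1 feedback rates are assumptions for illustration; a real guild would agree on its own formula in its bylaws.

```python
from dataclasses import dataclass


@dataclass
class MemberActivity:
    name: str
    transactions: int     # monthly transactions processed
    feedback_rate: float  # share of model outputs the member reviews or corrects (0..1)


def contribution_score(m: MemberActivity) -> float:
    # Hypothetical rule: volume counts, and active feedback can double its weight.
    return m.transactions * (0.5 + 0.5 * m.feedback_rate)


def voting_weights(members: list[MemberActivity]) -> dict[str, float]:
    scores = {m.name: contribution_score(m) for m in members}
    total = sum(scores.values())
    if total == 0:
        # Nobody contributed this period: fall back to equal weights.
        return {name: 1 / len(scores) for name in scores}
    return {name: s / total for name, s in scores.items()}


members = [
    MemberActivity("Restaurant A", 10_000, 0.8),  # rich feedback
    MemberActivity("Restaurant B", 2_000, 0.1),   # rarely corrects
]
weights = voting_weights(members)
for name, w in weights.items():
    print(f"{name}: {w:.1%}")
```

Because the inputs are metered and the formula is public, any member can recompute everyone's weight.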

2. Computational Mediation

Most decisions aren’t strategic but operational—resource allocation, model deployment, quality control.

AI handles this:

Automated scheduling based on demand forecasting

A/B testing determines which updates deploy

Anomaly detection auto-escalates only when needed

Standard disputes use pre-agreed automated arbitration

80% of governance is operational routine. AI handles the routine; humans handle strategic decisions quarterly. It’s an inverse 80/20 rule.
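"Auto-escalates only when needed" can be as simple as a statistical threshold. The sketch below uses a z-score on a member's recent daily waste percentage; the threshold of 3 and the sample readings are assumptions, and a production system would likely use something more robust.

```python
from statistics import mean, stdev


def needs_human_review(history: list[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Escalate only when the latest reading is far outside the recent norm."""
    if len(history) < 2:
        return True  # not enough data to judge, so let a person look
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


daily_waste_pct = [4.1, 3.9, 4.3, 4.0, 4.2, 3.8, 4.1]  # illustrative readings
print(needs_human_review(daily_waste_pct, 4.2))  # ordinary day, handled automatically
print(needs_human_review(daily_waste_pct, 9.5))  # sharp spike, escalated to a person
```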

3. Transparent, Auditable Everything

Every decision can be logged immutably, verified by any member (open source code, transparent algorithms), contested through defined appeals, and reviewed by elected oversight. This makes bad behavior detectable and costly in ways manual bookkeeping never could.
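One lightweight way to get a decision log that any member can verify is a hash chain, where each entry commits to the one before it. This is a sketch of the idea, not a full ledger: it makes tampering detectable rather than impossible, and the entry fields are made up for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash: str, payload: dict) -> str:
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], payload: dict) -> None:
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "prev": prev_hash, "hash": _digest(prev_hash, payload)})


def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


log: list[dict] = []
append_entry(log, {"decision": "deploy forecast model v2", "approved_by": "ab-test"})
append_entry(log, {"decision": "adjust fee reserve", "approved_by": "board"})
print(verify(log))  # True
```

If anyone later edits an earlier payload, `verify` returns `False` for every member who checks.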

Critical caveat on data sharing: When members are competitors, information sharing risks price signaling. The solution: establish a separate data trust as fiduciary entity, implement technical controls (secure aggregation, differential privacy, tiered access), follow safe-harbor frameworks for joint purchasing and benchmarking, and maintain competition counsel. This isn’t theoretical—medical GPOs and agricultural cooperatives have navigated this for decades.
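As one example of a technical control, a data trust could publish benchmarks with differential privacy, so that no single member's figure can be backed out of the aggregate. A minimal sketch of the Laplace mechanism on a bounded mean follows; the bounds, the epsilon value, and the sample figures are all assumptions.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials is Laplace-distributed with this scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_mean(values: list[float], lower: float, upper: float,
                 epsilon: float, rng: random.Random) -> float:
    """Mean of clipped values plus Laplace noise calibrated to one member's influence."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    clipped = [min(max(v, lower), upper) for v in values]
    true_mean = sum(clipped) / len(clipped)
    sensitivity = (upper - lower) / len(clipped)  # max shift from changing one value
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


spoilage_pct = [15.2, 18.9, 16.4, 19.7, 17.1]  # illustrative member figures
rng = random.Random(42)
benchmark = private_mean(spoilage_pct, lower=0.0, upper=30.0, epsilon=1.0, rng=rng)
print(f"Published spoilage benchmark: {benchmark:.1f}%")
```

Smaller epsilon means more noise and stronger privacy. With only a few dozen members the noise is material, which is part of why tiered access and legal controls still matter.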

For hard problems (member exits, strategic pivots, bad actors), you need hybrid governance. AI handles execution; humans handle strategy and exceptions. The difference: 90% of day-to-day friction is automated, letting human governance focus on the 10% requiring judgment.

The bar isn’t perfection—it’s better than alternatives: platform dictatorships (Toast changes fees unilaterally) or franchise hierarchies (corporate sets all rules). Cooperative governance is messy but accountable.

Why This Works Now

AI changes three things:

First: Coordination costs collapse—what required committees and quarterly meetings now happens through AI-mediated governance for 90% of operational decisions.

Second: The free-rider problem inverts, but with enforcement. When the asset is operational intelligence that improves through use, free-riding hurts you—but only if improvements are gated by attested contribution. Using federated learning with secure aggregation plus contribution scoring ensures improvements accrue to participating nodes after verified participation. This isn’t wishful thinking—Google’s Gboard uses cross-device federated learning at scale; healthcare pilots (MELLODDY in pharma, UK NHS federated radiology) demonstrate cross-silo FL with privacy preservation works in practice.
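The gating idea can be sketched in a few lines: average the members' model updates, then hand the result only to members whose participation was attested this round. This is a toy FedAvg-style average over plain lists, with made-up member names and update vectors; real deployments would use a federated learning framework and cryptographic attestation.

```python
from typing import Optional


def federated_average(updates: dict[str, list[float]],
                      sample_counts: dict[str, int]) -> list[float]:
    """Average member updates, weighting each by its local sample count."""
    total = sum(sample_counts[m] for m in updates)
    dim = len(next(iter(updates.values())))
    return [sum(updates[m][i] * sample_counts[m] for m in updates) / total
            for i in range(dim)]


def distribute(global_update: list[float], members: list[str],
               attested: set[str]) -> dict[str, Optional[list[float]]]:
    """Only members with verified participation receive this round's improvement."""
    return {m: (global_update if m in attested else None) for m in members}


updates = {"A": [1.0, 2.0], "B": [3.0, 4.0]}  # C submitted nothing this round
sample_counts = {"A": 3, "B": 1}
global_update = federated_average(updates, sample_counts)
delivered = distribute(global_update, ["A", "B", "C"], attested=set(updates))
print(global_update)   # [1.5, 2.5]
print(delivered["C"])  # None
```

The free rider keeps last round's model while contributors move ahead.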

Third: Context becomes the moat. Toast must serve millions with generic intelligence. A cooperative optimizes ruthlessly for members’ specific context. For operational tasks, depth beats breadth.

Defending the Moat

Fair challenge: couldn’t platforms just build “seafood restaurant mode”?

Three reasons this is harder than it seems:

1. The Operational Context Barrier

Platform data is sparse (passive users), noisy (wildly different contexts), and adversarial (restaurants compete, withhold insights).

Cooperative data is dense (every member improves the system), contextual (similar profiles), and collaborative (members benefit from each other’s improvements).

Toast sees “restaurant X orders 50 lbs salmon weekly.” The cooperative knows “our restaurants’ waste drops 15% when ordering Monday/Thursday instead of Wednesday/Friday, because of spoilage patterns across the specific supply chain we collectively negotiated.”

Platforms observe; cooperatives co-evolve.

2. Incentive Alignment

When Toast builds a feature improving margins 2%, the restaurant makes slightly more money and Toast eventually raises prices to capture value. Restaurants rationally stop sharing improvements.

In cooperatives, improvements benefit all members proportionally with no rent extraction. Rational behavior is continuous improvement. This creates compounding divergence.

3. Architectural Lock-In

Platforms must standardize—their optimization target remains ARR and generic capability. They cannot openly share cross-customer learnings that advantage one competitor over another without channel conflict, and they must standardize tooling to control COGS.

Cooperatives can hard-specialize: custom deployments, fine-tuned models for their exact profile, optimized hardware. Their business model depends on member success, not subscriber growth.

Anticipated counter: “But platforms aggregate more data and will eventually match cooperative depth through sheer scale.”

Rebuttal: The moat isn’t data volume but context density plus aligned incentives. A platform serving 100,000 restaurants has shallow data on each. A cooperative serving 50 similar restaurants has deep, continuously refined operational intelligence with full member cooperation. The differentiator isn’t the base model; it’s the collectively refined operational intelligence that can’t be reconstructed by observation alone.

The Bootstrapping Challenge

Pooling $400K across 50 margin-compressed restaurants is hard. Three plausible paths:

Path 1: Vendor-Catalyzed Consortia

Hardware vendors face fragmented markets. Creating cooperatives is go-to-market strategy. NVIDIA, AMD, or edge compute manufacturers could offer turnkey deployment packages—hardware, installation, base models, training—at bulk discounts with gradual transition to cooperative ownership. Not charity—customer aggregation creating stable long-term customers for hardware refresh cycles.

Path 2: Industry Association Evolution

Existing associations (state restaurant associations, farm bureaus, trucking councils) could negotiate bulk deals, provide legal templates, run pilots. Many have 1,000+ members; modest per-member fees fund pilots demonstrating viability.

Path 3: Franchise Defection

Franchisees with adversarial relationships to franchisors could pool AI infrastructure outside corporate control. They own the intelligence layer while paying franchise fees for brand. Over time, power shifts from franchisor (controls brand) to franchisee collective (controls operational intelligence).

The common thread: Cooperatives need catalysts. But once one succeeds demonstrating margin improvement, diffusion accelerates.

Platform Counter-Strategies (And Why They’re Insufficient)

“Sovereign Cloud”: Run our software on your hardware—but software’s still proprietary. Not actual sovereignty.

Temporary Price Cuts: Subsidize heavily—but platforms can’t win wars of attrition when cooperatives have no burn rate and members own infrastructure.

Lobby Against Cooperatives: Most dangerous strategy. Regulatory capture is real. But cooperatives can position themselves as small business protection (politically popular), data sovereignty (privacy advocates), and competition preservation (antitrust).

Acquire Enabling Tech: Too late—federated learning frameworks, edge deployment tools, and governance software are increasingly open source.

Anticipated counter: “Edge is a reliability and security nightmare compared to centralized cloud.”

Rebuttal: Cooperatives can centralize SRE and security operations (analogous to credit union CUSOs—Credit Union Service Organizations), enforce golden images, mobile device management (MDM), signed updates, and secure enclaves. Offline-first architecture and local control actually reduce single-point cloud failures. The distributed system is more resilient, not less, when properly operated.

A Concrete Example: The Gulf Coast Seafood Cooperative

The Setup: 40 independent seafood restaurants (Houston, New Orleans, Mobile), averaging about $80K in profit per location. Similar challenges: 15-20% spoilage, variable supply, pricing volatility, labor turnover.

Formation (Year 0): Gulf States Restaurant Association convenes owners. Vendor-catalyzed consortium offers turnkey deployment ($6K per location).

Stack:

Edge: x86/ARM mini-PCs with on-die NPU ($2,500-$3,500/site); vision accelerators (Hailo/Coral for spoilage detection); managed via MDM (Mender/FleetDM) and k3s

Serving: vLLM for small language models, ONNX/TFLite for vision; event bus (MQTT); feature store at edge with periodic secure sync

Federated Learning: Flower/FedML with secure aggregation and differential privacy; attestation of participation for update eligibility

Observability: OpenTelemetry; SLOs on inference latency, drift, and safety

Safety: Policy engine (OPA), PII minimization, role-based prompts/tools
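The "secure aggregation" in the federated learning line of this stack deserves a quick illustration, because it is what lets competitors pool signal without exposing individual numbers. The toy sketch below uses pairwise masks that cancel in the sum. It is a simplification under stated assumptions: a seeded random generator stands in for pairwise key agreement, values are pre-quantized integers, and dropout handling is ignored.

```python
import random

MODULUS = 2**32  # all arithmetic is done modulo this value


def masked_inputs(values: dict[str, int], seed: int = 0) -> dict[str, int]:
    """Each pair of members shares a random mask; one adds it, the other subtracts it."""
    rng = random.Random(seed)  # stand-in for pairwise key agreement between members
    names = sorted(values)
    masked = dict(values)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            mask = rng.randrange(MODULUS)
            masked[a] = (masked[a] + mask) % MODULUS
            masked[b] = (masked[b] - mask) % MODULUS
    return masked


def aggregate(masked: dict[str, int]) -> int:
    """The coordinator sees only masked values, yet the masks cancel in the total."""
    return sum(masked.values()) % MODULUS


weekly_salmon_lbs = {"A": 120, "B": 75, "C": 310}  # illustrative private inputs
masked = masked_inputs(weekly_salmon_lbs)
print(aggregate(masked))  # 505
```

The coordinator learns the guild-wide total but not any single restaurant's order volume.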

Operations (Year 1-2): Systems learn optimal ordering, labor scheduling, supplier negotiations. Federated learning propagates improvements. Automated governance handles compute/models. Human governance: quarterly meetings, 7-member elected board. KPIs tracked: food waste %, forecast variance, labor utilization, safety incidents, energy per inference.

Economics (Realistic TCO over 3 years):

Baseline Platform Costs (per location):

SaaS/POS suite: $2k-5k/yr

Payments extraction: 40-80 bps above actual costs on $1.5M card volume = $6k-12k/yr

Total per location: $8k-17k/yr

40 locations × 3 years = $960k-$2M

Cooperative Costs:

Edge kit: $100k-140k (40 sites × $2.5k-3.5k)

Central infrastructure: $75k-100k

Engineering/SRE: 2-3 FTEs at a blended team cost of $180k-270k/year × 3 years = $540k-810k

Software/API: $60k-180k over 3 years

Total: $775k-$1.23M over 3 years

Value Capture:

Payments savings: 40-80 bps on $180M aggregate (40 × $1.5M × 3 years) = $720k-$1.44M

Food waste reduction: up to 7% of COGS improvement on ~$800k/location × 40 × 3 years = $2.5M-3.5M (at 30-35% food cost; the range counted here is roughly 2.6-3.6% of the $96M aggregate)

Labor efficiency: 1-2% improvement on ~$600k/location labor × 40 × 3 years = $720k-$1.44M

Total value capture: $3.94M-6.38M over 3 years

Net benefit: $2.7M-5.2M over 3 years, or $67k-130k per location
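For anyone who wants to check the arithmetic, the figures above can be recomputed in a few lines. The food waste range is taken as stated rather than derived. Subtracting the high cost estimate from both value bounds is my assumption about how the net range was paired; it approximately reproduces the numbers in the text.

```python
LOCATIONS = 40
YEARS = 3

# Cooperative costs over 3 years as (low, high) dollars, from the list above.
costs = {
    "edge_kit": (100_000, 140_000),
    "central_infrastructure": (75_000, 100_000),
    "engineering_sre": (540_000, 810_000),
    "software_api": (60_000, 180_000),
}

card_volume = 1_500_000 * LOCATIONS * YEARS  # $180M aggregate card volume
labor_base = 600_000 * LOCATIONS * YEARS     # $72M aggregate labor cost

# Value capture over 3 years as (low, high) dollars.
value = {
    "payments_savings": (card_volume * 40 // 10_000, card_volume * 80 // 10_000),
    "food_waste": (2_500_000, 3_500_000),  # taken as stated in the text
    "labor_efficiency": (labor_base * 1 // 100, labor_base * 2 // 100),
}


def total(items: dict[str, tuple[int, int]]) -> tuple[int, int]:
    return (sum(lo for lo, _ in items.values()), sum(hi for _, hi in items.values()))


cost_low, cost_high = total(costs)
value_low, value_high = total(value)

# Conservative pairing (assumption): charge the high cost estimate against both bounds.
net_low = value_low - cost_high
net_high = value_high - cost_high

print(f"Costs: ${cost_low:,} to ${cost_high:,}")
print(f"Value: ${value_low:,} to ${value_high:,}")
print(f"Net:   ${net_low:,} to ${net_high:,}")
print(f"Per location: ${net_low / LOCATIONS:,.0f} to ${net_high / LOCATIONS:,.0f}")
```

This yields roughly $2.71M-5.15M net, or about $68k-129k per location, in line with the rounded figures above.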

Result: 4% net margin → 7-9% net margin. Not “10-20x” but doubling margins, which is transformational for survival and competitive positioning.

The Moat: System knows “Gulf Coast seafood restaurant” patterns Toast’s generic system doesn’t. New restaurants joining immediately benefit from 3 years collective learning. Building independently costs $150K+ and 2 years. Joining is rational; staying independent is expensive.

90-day pilot outcomes: Initial spoilage alerts working, baseline KPIs established, member training complete, first federated learning cycle tested.

12-month KPI targets: 3-5% food waste reduction, 5-8% labor efficiency improvement, 20-40 bps payments savings demonstrated, zero unplanned downtime >4 hours.

The Physics of Compute

We’re not in the GPU-only future everyone assumes. Heterogeneous computing means:

Vision: NPUs (5W power for spoilage detection)

Time-series forecasting: FPGAs (deterministic, low latency for inventory)

Customer interaction: Small language models on CPUs

Complex optimization: Monthly API calls to Claude/GPT

Acknowledged friction: Developer ops and toolchains for heterogeneous systems are complex. Cooperatives mitigate this by pooling specialized MLOps talent and standardizing on a curated kit (Hailo/Coral for vision, x86/ARM with NPUs for SLMs), effectively creating shared engineering capacity that individual operators couldn’t afford.

Total inference cost: ~$40/month per restaurant

Equivalent cloud GPU: ~$800/month

The heterogeneous approach is 20x cheaper for these specific tasks with better task-specific performance. Platform vendors can’t do this—must standardize across millions of sites. Optionality itself is the moat.

Energy and Locality Are Shaping Architecture

Hyperscalers are contracting directly for nuclear, geothermal, and other firm clean power:

Microsoft’s power purchase agreement with Helion for fusion energy

Google’s contract with Fervo for geothermal

Amazon’s data center campus adjacent to Talen nuclear facility at Susquehanna

Singapore imposed data center moratorium (2019-2022), now permits only with strict efficiency/clean-power criteria per IMDA/JTC guidance

Ireland faces connection constraints in Dublin with CRU/EirGrid notices creating de facto prioritization for limited grid capacity

The PUE trade-off (acknowledging hyperscale advantage):

Hyperscale data centers achieve PUE (Power Usage Effectiveness) of ~1.1-1.2 through sophisticated cooling, power distribution, and load optimization. Edge deployments typically have worse PUE—often 1.5-2.0.

But the argument isn’t PUE. The argument is system-level efficiency:

Avoided data movement: Moving image/sensor data to cloud consumes far more energy than local inference

Latency and availability: Physical operations can’t tolerate cloud round-trips or outages

Co-location with behind-the-meter renewables: Restaurants/farms can deploy solar, use waste heat, schedule workloads during optimal power windows

Distributed scheduling: Cooperatives can optimize across heterogeneous fleet based on real-time energy availability and cost

Back-of-envelope per-operation comparison:

Centralized GPU cloud: ~2-3 Wh per complex inference including data movement from edge to cloud and back

Heterogeneous edge (NPU for vision, FPGA for time-series): ~0.02-0.05 Wh for equivalent specific tasks with zero data movement

For continuous inference on local sensor/operational data, distributed architecture can be 40-150x more energy efficient for these specific workloads, even accounting for worse PUE.
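The 40-150x range falls straight out of the per-operation figures: divide the cheapest cloud estimate by the most expensive edge estimate for the floor, and the reverse for the ceiling. A quick sketch, where the 10,000 inferences per day is purely an illustrative assumption:

```python
cloud_wh = (2.0, 3.0)   # Wh per complex inference, centralized GPU cloud (from the text)
edge_wh = (0.02, 0.05)  # Wh per equivalent task, heterogeneous edge (from the text)

ratio_low = cloud_wh[0] / edge_wh[1]   # best-case cloud vs worst-case edge
ratio_high = cloud_wh[1] / edge_wh[0]  # worst-case cloud vs best-case edge
print(f"Edge advantage: {ratio_low:.0f}x to {ratio_high:.0f}x")

inferences_per_day = 10_000  # hypothetical workload for one site
cloud_kwh_per_day = tuple(wh * inferences_per_day / 1000 for wh in cloud_wh)
edge_kwh_per_day = tuple(wh * inferences_per_day / 1000 for wh in edge_wh)
print(f"Cloud: {cloud_kwh_per_day[0]:.1f}-{cloud_kwh_per_day[1]:.1f} kWh/day")
print(f"Edge:  {edge_kwh_per_day[0]:.1f}-{edge_kwh_per_day[1]:.1f} kWh/day")
```

At that workload the edge box draws well under a kilowatt-hour a day, which is the scale at which rooftop solar becomes relevant.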

As grid constraints tighten and carbon regulation increases, system-level efficiency and energy access favor distributed architectures for operational AI workloads.

The Return of the Guild (And Why It’s Different)

Medieval guilds controlled knowledge transfer, quality standards, market access, tool sharing, and risk pooling. They collapsed because industrial capitalism needed labor mobility and knowledge commodification.

But guilds also failed because they: restricted entry (hereditary), suppressed innovation (resisted new methods), limited scale (local only), coordinated at human speed (couldn’t adapt).

AI cooperatives—which I’m calling guilds to denote member-owned entities jointly controlling compute, continuously-improving operational intelligence, and membership-gated learning commons—avoid these failures:

Open entry: Any qualifying restaurant joins (operational standards, not heredity). Contribution-weighted governance. Exit rights preserved.

Innovation: Federated learning allows local experimentation. Successful innovations propagate automatically. Failed experiments don’t crash system.

Scale: Local guilds interoperate. Shared protocols (OPC UA for manufacturing, FHIR for healthcare, IDS/GAIA-X for data spaces) can serve as exemplars of the Phase 2 coordination substrate.

Machine-speed coordination: Operational decisions automated. Strategic decisions quarterly.

Digital coordination removes constraints that made guilds inefficient, while preserving collective ownership that made them resilient.

The Three-Phase Value Migration

Phase 1 (Present - 5 years): Compute/Energy Capture

Value to: NVIDIA, AMD, silicon vendors, power infrastructure, edge hardware. The brake: Energy infrastructure moves at bureaucratic speed in US. China likely compresses to 3 years through centralized planning.

Phase 2 (3-7 years): Coordination Infrastructure

Value to: Federated learning protocols, human-AI standards, operational frameworks, next-gen programming (MOJO, machine-to-machine protocols like TriM2M).

Why compression: AI accelerates technical coordination—protocol testing, framework design, governance iteration at machine speed.

What this doesn’t bypass: Trust between members (human speed), regulatory evolution (legislative timescales), security vulnerabilities, emergent coordination failures, cultural resistance.

Phase 3 (8-12 years): Ecosystem/Community Depth

Value to: Cooperatives with deepest integration, communities with most sophisticated coordination, networks with richest adaptation.

The Critical Nuance: AI accelerates technical iteration but not trust-building, regulatory evolution, or institutional legitimacy. New bottlenecks emerge: machine-speed outpacing oversight, automated governance optimizing wrong objectives, protocol evolution creating compatibility chaos.

Phases overlap significantly. Transitions messy and non-linear. We’re compressing timelines by reducing certain friction while creating new constraints. These are estimates with high uncertainty.

A Guild Playbook: Making This Concrete

Legal Structure:

Form as cooperative or mutual benefit corporation

Establish separate data trust as fiduciary entity governing data access/rights

Clear exit/portability policy in founding documents

Governance:

One-member/one-vote for standards and strategic decisions

Weighted dividends via contribution scoring (data quality, uptime, performance improvements)

Publish Ostrom-style design principles adapted for AI commons

Economic Model:

Centralized SRE and model engineering team (co-op-owned, 2-3 FTEs for 50-member guild)

Joint procurement for payment processing and energy

Member fee = marginal opex + modest reserve; dividends paid from realized savings vs baseline

5-7 year capital amortization with planned refresh cycles
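The fee and dividend rules in this economic model translate directly into code. A minimal sketch, assuming a 10% reserve rate and made-up contribution scores (neither number comes from the playbook itself):

```python
def member_fee(marginal_opex: float, reserve_rate: float = 0.10) -> float:
    """Fee = marginal operating cost plus a modest reserve (the rate is an assumption)."""
    return marginal_opex * (1 + reserve_rate)


def dividends(realized_savings: float, scores: dict[str, float]) -> dict[str, float]:
    """Split savings versus baseline in proportion to contribution scores."""
    total = sum(scores.values())
    if total <= 0 or realized_savings <= 0:
        return {name: 0.0 for name in scores}  # nothing to distribute this period
    return {name: realized_savings * s / total for name, s in scores.items()}


monthly_fee = member_fee(marginal_opex=500.0)
payouts = dividends(100_000, {"A": 3.0, "B": 1.0})
print(f"Monthly fee: ${monthly_fee:,.2f}")
print(payouts)  # {'A': 75000.0, 'B': 25000.0}
```

Paying dividends only from realized savings keeps the guild from distributing money it has not actually saved.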

Standardization Strategy:

Curated kit approach reduces toolchain complexity

Golden images and signed updates enforced via MDM

Shared MLOps capacity individual operators couldn’t afford

Success Metrics:

Food waste %, forecast variance, labor utilization, safety incidents

Energy per inference, FM API spend share

Member retention and satisfaction

New member adoption rate

Where This Goes

Devansh is right about pain points, enabling technology, and near-term adoption. But the long-term equilibrium looks different than digital feudalism.

When the first cooperative demonstrates margin doubling, when guilds show energy sovereignty enabling compute independence, when operational intelligence emerges that platforms can’t match—the conversation shifts.

From “Which platform landlord?” to “How do we build sovereignty through cooperative ownership?”

We’re not just digitizing low-tech industries. We’re potentially restructuring economic organization in the physical economy.

Medieval guilds controlled knowledge, tools, and standards. Industrial capitalism dissolved them because scale required mobility and commodification. But if AI enables coordination at scale while preserving local control, if heterogeneous compute enables optionality over standardization, if energy constraints favor distribution over centralization...

Then what once was might return anew. Not from nostalgia, but because the physics of intelligence and energy have shifted enough to make different organizational forms economically viable.

A Final Thought

Devansh asks: “Are we getting into this, or do you hate money?”

I’d reframe: Are we choosing sovereignty or capture?

The platform path is clear and likely profitable for early movers. But the cooperative path might offer something more valuable: actual ownership of the intelligence that runs your operation.

For brownfield operators who’ve survived on clipboard-and-chaos for forty years, who’ve watched enterprise software promise transformation and deliver bankruptcy, who operate on margins so thin one bad decision is fatal—the question isn’t academic.

Do we rent cognition, or do we own it?

The answer will determine not just which vendors win, but what the economic landscape looks like when intelligence becomes infrastructure.

Devansh has shown why transformation is inevitable and how the technology works. The question of who owns it when the dust settles is still open.

And that’s a conversation worth having.

Outstanding analysis on cooperative economics in the AI era. The federated learning architecture you describe is spot-on for operational intelligence, where context density matters far more than data volume. What's often overlooked is how secure aggregation plus contribution scoring solves the free-rider problem that killed earlier cooperative attempts. The Gulf Coast seafood example is particularly compelling, showing how heterogeneous edge compute can be 20x cheaper than cloud GPUs for specific operational tasks while building a context moat platforms simply can't replicate.